What is the difference between concurrent programming and parallel programming?

Concurrent programming regards operations that appear to overlap and is primarily concerned with the complexity that arises due to non-deterministic control flow. The quantitative costs associated with concurrent programs are typically both throughput and latency. Concurrent programs are often IO bound but not always, e.g. concurrent garbage collectors are entirely on-CPU. The pedagogical example of a concurrent program is a web crawler. This program initiates requests for web pages and accepts the responses concurrently as the results of the downloads become available, accumulating a set of pages that have already been visited. Control flow is non-deterministic because the responses are not necessarily received in the same order each time the program is run. This characteristic can make it very hard to debug concurrent programs. Some applications are fundamentally concurrent, e.g. web servers must handle client connections concurrently. Erlang, F# asynchronous workflows and Scala's Akka library are perhaps the most promising approaches to highly concurrent programming.

Multicore programming is a special case of parallel programming. Parallel programming concerns operations that are overlapped for the specific goal of improving throughput. The difficulties of concurrent programming are evaded by making control flow deterministic. Typically, programs spawn sets of child tasks that run in parallel and the parent task only continues once every subtask has finished. This makes parallel programs much easier to debug than concurrent programs. The hard part of parallel programming is performance optimization with respect to issues such as granularity and communication. The latter is still an issue in the context of multicores because there is a considerable cost associated with transferring data from one cache to another. Dense matrix-matrix multiply is a pedagogical example of parallel programming and it can be solved efficiently by using Straasen's divide-and-conquer algorithm and attacking the sub-problems in parallel. Cilk is perhaps the most promising approach for high-performance parallel programming on multicores and it has been adopted in both Intel's Threaded Building Blocks and Microsoft's Task Parallel Library (in .NET 4).

If you program is using threads (concurrent programming), it's not necessarily going to be executed as such (parallel execution), since it depends on whether the machine can handle several threads.

Here's a visual example. Threads on a non-threaded machine:

-- -- --

/ \

>---- -- -- -- -- ---->>

Threads on a threaded machine:

------

/ \

>-------------->>

The dashes represent executed code. As you can see, they both split up and execute separately, but the threaded machine can execute several separate pieces at once.

https://joearms.github.io/published/2013-04-05-concurrent-and-parallel-programming.html

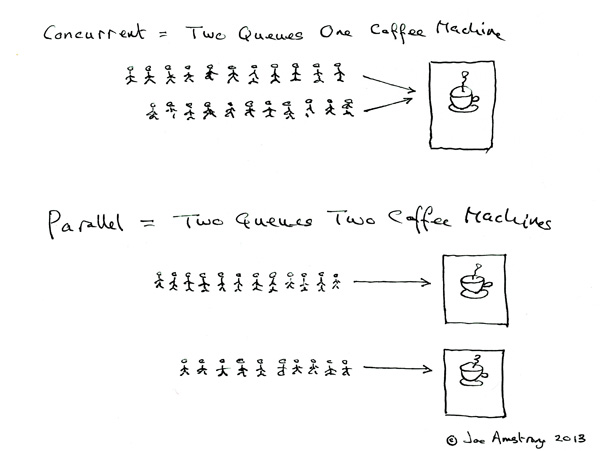

Concurrent = Two queues and one coffee machine.

Parallel = Two queues and two coffee machines.